Dec 19, 2023

Who this Blog for:

This blog is meant for IT Managers, CTOs and DevOps professionals who are looking to implement a new Salesforce DevOps process or improve their existing process to 10x their development efficiency.

I. Introduction

Brief overview of Salesforce Standard Development Tools and Challenges:

For much of Salesforce’s History, the Salesforce Org was the single Source of Truth for all development metadata and changesets were used to move the metadata from one org to another. Given that Salesforce was becoming more than a simple CRM, the old school software development challenges came into play, where bigger teams started running into version control issues, security risks and deployment challenges. All this has translated into decreased productivity, reduced collaboration, inconsistent environments and lack of visibility and transparency. By 2016, all these issues had made one Salesforce of the worst ranked platforms for scalable agile development. To address this, Salesforce created a Tiger Team from which the modern Salesforce DevOps process is born. Through multiple years of iteration and tooling, we are now in the modern era of Salesforce DevOps, where it is as good or better than other technologies. When a Salesforce DevOps Solution is properly implemented, teams can optimize their development and testing process, fostering seamless collaboration and accelerating the delivery of high-quality releases. This, in turn, drives business success by enabling teams to build and respond to evolving market demands and needs ultimately increasing overall team ROI.

The Need for Salesforce DevOps and Importance of DevOps in Salesforce implementation and management:

At a high level here are the common challenges faced by companies lacking a fully functioning DevOps process:

Inefficient Development Process: Manual deployments, testing, and releases lead to wasted time and effort.

Version Control Issues: Conflicts, overwrites, and merge issues arise when multiple developers work on the same metadata.

Deployment Failures: Manual deployments lead to errors, downtime, and rollbacks.

Lack of Collaboration: Developers work in silos, leading to communication breakdowns and inconsistent development practices.

Insufficient Testing: Inadequate testing leads to bugs, errors, and poor-quality releases.

Security Risks: Unmanaged access, weak passwords, and outdated security settings lead to security breaches.

Compliance Issues: Non-compliance with regulatory requirements, such as GDPR, HIPAA, or CCPA, leads to legal issues.

Scalability Challenges: Inefficient development processes limit the ability to scale and meet growing business demands.

Lack of Visibility: Inadequate reporting and analytics make it difficult to gain insights into development performance and business outcomes.

Talent Attrition: Developers become frustrated with inefficient processes and leave the team.

Inconsistent Environments: Different environments (dev, uat, prod) have inconsistent metadata and settings.

Manual Data Management: Manual data migration and backup lead to errors and data loss.

Lack of Automation: Manual processes limit the ability to automate repetitive tasks and workflows.

Inadequate Backup and Recovery: Insufficient backup and recovery processes lead to metadata/data loss and prolonged downtime.

High Technical Debt: Accumulated technical debt leads to complex and difficult-to-maintain codebases.

In my interactions with executives over the years, I've observed that while many companies excel in addressing certain challenges, they still face shortcomings in others. My intention through this blog is to offer a comprehensive understanding of implementing a fully functional DevOps system that effectively addresses all of the challenges.

II. What is Salesforce DevOps?

What is Salesforce DevOps and what does it entail too?

At a high-level here is what DevOps entails to:

At the crux of Salesforce DevOps, or any DevOps for that matter is, the CI/CD model which enables to continuously plan, create, verify, deploy and monitor releases. The CI/CD model enables teams to deliver faster, more reliable, and higher-quality releases, fostering a culture of collaboration, innovation, and continuous improvement. By automating and streamlining the software development lifecycle, teams can respond quickly to changing requirements, improve customer satisfaction, and drive business success. In the following sections, we'll delve deeper into the functions of CI/CD and explore the tools required to implement a fully functional CI/CD DevOps process.

What are the components that make up CI/CD and their respective sub-components?

CI (Continuous Integration):

Continuous Integration (CI) is a software development practice that involves regularly integrating code changes into a central repository. The key components that make up Continuous Integration are:

Version Control:

Centralized Codebase: Use of a central repository where all the metadata and artifacts are housed, which helps teams in synchronizing their efforts and avoiding conflicts.

Branching and Merging: Developers use branching strategies (e.g., feature branches, bugfix branches) to work on different stories (i.e. features) independently before merging changes back into the main branch (often the master or main branch).

Tracking Changes: Tracking all changes made to the codebase, providing a history of modifications. This makes it easy to understand who made changes, why they were made, and when.

Documentation and Traceability: Integrations with Project Management tools such as Jira, Azure DevOps Boards to help document the purpose of changes, providing context and understanding for future reference and audit purposes.

Environment Strategy:

Need for Multiple managed environments to ensure:

Controlled Deployment: By deploying changes to progressively more production-like environments (e.g., development -> testing -> staging -> production), teams can identify and address issues at each stage, reducing the risk of deploying faulty code to production.

Isolation: Ensuring each environment is isolated to prevent interference. This means changes in one environment do not affect others until they are explicitly promoted.

Concurrent Development and Testing: Multiple environments allow development and testing activities to occur simultaneously without conflicts. For example, while testers are validating the current release in the staging environment, developers can continue working on new features in the development environment.

Security: Using separate environments helps ensure sensitive data is protected. For example, production data can be masked or replaced with synthetic data in testing and staging environments to comply with data privacy regulations.

Systematic Testing: Different environments enable systematic and comprehensive testing. Unit tests can be run in the development environment, integration tests in a testing environment, and full end-to-end tests in a staging environment.

Code Review:

Best Practices: Code reviews ensure that code adheres to coding standards and best practices, promoting consistency and readability across the codebase and organization.

Collaboration: Code reviews encourage collaboration and communication among team members, fostering a culture of collective code ownership and responsibility.

Knowledge Transfer: Code reviews facilitate the sharing of knowledge about the codebase, tools, and techniques among team members. This is particularly beneficial for onboarding new developers.

Skill Improvement: Routine code reviews provide developers with continuous feedback, allowing them to enhance their coding abilities and gain a deeper understanding of the codebase, leading to ongoing improvement.

Coding Standards: Code reviews enforce coding standards and guidelines, which leads to a more consistent and maintainable codebase.

Code Quality Checks:

Static Code Analysis: Tools that analyze the code for potential errors, code smells, and adherence to coding standards without executing it (e.g., SonarQube, CodeScan, Clayton, ApexPMD, CodeClimate, Codacy).

Security Scanning: Tools that scan for security vulnerabilities within the code (e.g., Snyk, Checkmarx, Chimera, Fortify, DigitSec).

Performance & Penetration Testing: Although it is part of the standard DevOps Practice, performance and penetration testing on the Salesforce Platform is prohibited unless previously scheduled with Salesforce, thus there aren’t tools that have been developed specially for the Salesforce Platform. Most tools try to emulate potential problems, they aren’t perfect but that is as close as we can get with performance and Penn testing.

Continuous Monitoring:

Build Notifications: Alerts and notifications about the status of builds and tests, often integrated with collaboration tools like Slack or email.

Post-Deployment Checks: After new code is deployed, continuous monitoring ensures that the deployment has not introduced new issues and that the application continues to perform well.

Rollback Triggers: Automated monitoring can trigger rollbacks if critical issues are detected post-deployment, ensuring minimal disruption.

Incident Response: Integrates with incident management tools to streamline the response process and coordinate efforts across teams.

Trend Analysis & Performance Metrics: Monitoring key performance indicators (KPIs) and analyzing historical data helps identify trends, predict future issues, and plan for capacity needs.

CD (Continuous Delivery):

Continuous Delivery (CD) is a software development practice that involves delivering small, incremental changes to production in a timely and reliable manner. The key components and subcomponents that make up Continuous Delivery are:

Automated Builds:

Consistency and Reliability: Automated builds ensure that the same source code will produce the same results eliminating human error, in the context of Salesforce, a same Apex class deployed between environments should produce the same errors assuming the data is in sync

Immediate Validation: Automated builds are triggered by code commits, providing immediate feedback to developers about potential errors. This rapid feedback loop helps developers detect and fix issues early.

Resource Optimization: Automated build systems can efficiently manage and allocate resources, optimizing the build process, especially when multiple developers are deploying at the same time.

Integration with Automated Testing: Automated builds can be integrated with automated testing frameworks to run unit tests, integration tests, and other types of tests as part of the build process. This ensures that code changes do not introduce new bugs.

Environment Parity: Automated builds help ensure that the same build artifacts are deployed across different environments (development, testing, staging, production), reducing environment-specific issues.

Automated Release Process: Streamlined and automated process for releasing software to production, minimizing manual steps and reducing the risk of human error.

Test Automation:

Unit Tests: Small, fast tests that check individual components of the code for correctness.

Integration Tests: Tests that ensure different modules or services work together as expected.

Functional Tests: Tests that validate the functionality of the software from an end-user perspective.

Application Architecture:

Perhaps the most misunderstood component of them all is Application Architecture, a simple problem that seems to break pipelines might sound far -fetched but it regularly happens in enterprise today. The crux of this problem is because so little attention has been paid to Application Architecture. Application architecture is foundational to the success of Continuous Delivery. It impacts everything from modularity, scalability, and testability to deployment strategies and monitoring. A well-architected application not only simplifies the implementation of CD practices but also enhances the overall efficiency, reliability, and speed of delivering software. Investing in good application architecture is, therefore, essential for achieving the full benefits of Continuous Delivery.

The Hidden CD (Continuous Development):

Perhaps the least discussed CD is Continuous Development. To be able to achieve Continuous Development, an organization has to get CI (Continuous Integration) and CD (Continuous Delivery) done perfectly. Continuous Development is a comprehensive approach to software development that integrates Continuous Integration, Continuous Delivery, and Continuous Monitoring into a cohesive process. By automating and streamlining every stage of the software development lifecycle, Continuous Development ensures that software can be developed, tested, and released quickly, reliably, and sustainably. This practice is essential for modern software development, enabling teams to deliver high-quality software at a rapid pace.

Ideal Size Team Where DevOps Really matters:

Before embarking on a DevOps implementation, it's essential to consider a crucial question: what is the optimal team size for DevOps Solution? While the answer varies significantly based on the organization and its needs—such as whether it is a high-transparency organization like a government entity with frequent audits, or a high-growth company anticipating substantial expansion of its development team in the coming quarters—I can broadly categorize this into three groups:

Small teams (1-4 people): For a team of this size, I generally would not recommend a DevOps solution, as the cost and maintenance of such a solution would far outweigh the potential benefits received. The most fitting comparison I can make is that it's like procuring a high-performance F-16 fighter jet when the team's needs are more akin to a small, agile Cessna aircraft. In other words, it's overkill to bring a powerful and complex solution when a simpler, more lightweight approach like the Salesforce default changeset or free solutions like Copado Essentials (ClickDeploy) would suffice.

Medium teams (5-9 people): This is the ideal team size at a minimum before I would consider implementing DevOps Solutions. At this stage, the complexity and challenges grow between teams and implementing a good DevOps solution allows for a good balance between collaboration, quality, reporting, and scalability.

Large teams (10 or greater): At this category, DevOps is an absolute must, as it facilitates efficient collaboration, automates repetitive tasks, ensures consistent deployment processes, and helps manage the complexity that comes with a larger number of contributors. Implementing DevOps practices in large teams enhances productivity, improves code quality, and reduces the time it takes to deliver new features and updates.

Ideal Number of Projects Where DevOps Really matters:

Another angle to consider, besides team size, is the number of projects in the pipeline. Each project can be defined as a separate function that is completely unrelated. For example, there could be one team that is selling the core product and a separate team that handles insurance and protection related to the product. When dealing with multiple, unrelated projects within an organization, the complexity of managing these projects increases significantly. Each project often has its unique requirements, dependencies, and timelines, which can lead to potential conflicts if not managed correctly. By implementing separate DevOps pipelines for each project, organizations can isolate the issues to that team to get faster resolution. Here is the ideal project size to consider

Small projects (1-2 projects): For a small number of projects, a basic out of box Salesforce setup might be sufficient. This could involve using Salesforce Change Sets or Copado Essentials, along with manual testing and deployment processes.

Medium projects (3-4 projects): As the number of projects increases, the need for a more robust DevOps solution becomes apparent. At this stage, the need for bare-bones DevOps tools is necessary for the org to be efficient.

Large projects (5 or more projects): With a large number of projects, a comprehensive DevOps solution is essential. This should include a robust CI/CD pipeline, automated testing at all levels (unit, integration, and end-to-end), and comprehensive monitoring and alerting. A mature DevOps practice can help manage the complexity of multiple projects, ensuring that changes are deployed smoothly and reliably.

Tools and Technologies:

Source control systems:

Git: Distributed version control system commonly used for source code management. (e.g., GitLab, GitHub, Azure DevOps, BitBucket)

Subversion (SVN): Centralized version control system. (e.g., Apache Subversion, TeamCity)

Metadata Management and Deployment:

Though a comparison chart would be apt to compare and contrast these tools below, the below all achieve the same end goal of deploying metadata to Salesforce orgs, each with varying degrees of automation, control, and ease of use.

Salesforce DX (SFDX): Salesforce's own command-line interface (CLI) and set of tools designed to improve the development and deployment experience.

Gearset: A powerful DevOps solution for Salesforce, offering features like comparison and deployment, backup and recovery, and automated testing.

Copado: A comprehensive DevOps platform tailored for Salesforce, providing end-to-end automation of the release process.

AutoRABIT: Another popular Salesforce DevOps platform with features like version control, deployment automation, and testing.

Flosum: A Salesforce release management solution focused on compliance and governance.

Metazoa: A Salesforce DevOps platform offering tools for metadata management, org visualization, data backup and migration, and streamlined release management.

Copado Essentials (formerly ClickDeploy): Provides a simple, user-friendly interface for deploying changes between Salesforce orgs.

Blue Canvas: A Git-based Salesforce DevOps platform that simplifies version control, metadata backups, sandbox comparisons, and deployments

Continuous Integration and Continuous Delivery (CI/CD):

Jenkins, CircleCI, Travis CI, Bamboo: These general-purpose CI/CD tools can be configured to work with Salesforce deployments.

Gearset CI/CD, Copado CI/CD, AutoRABIT CI/CD: These platforms offer built-in CI/CD capabilities specifically for Salesforce.

Testing and Quality Assurance:

Provar: A test automation tool designed for Salesforce, enabling the creation and execution of automated tests.

Selenium: A popular open-source framework for web browser automation, which can be used for Salesforce UI testing.

ApexUnit: Salesforce's built-in unit testing framework for Apex code.

Testsigma: A low-code, test automation platform that accelerates and simplifies Salesforce testing, allowing users to create reliable tests in plain English.

Copado Robotic Testing: Automates end-to-end testing for Salesforce applications, ensuring quality and performance by simulating user interactions and validating workflows.

AccelQ: AccelQ is an AI-powered codeless test automation platform designed for Salesforce, providing end-to-end testing capabilities to improve software quality and accelerate releases.

testRigor: testRigor is a free-flowing plain English build test automation that can perform unit tests, Integration tests and End-to-end tests

Katalon: Katalon is a comprehensive software quality management platform that simplifies testing through a user-friendly interface and powerful automation features whilst also creating robust reporting tools.

Leapwork: Leapwork is a no-code test automation platform that enables users to create and maintain automated tests for various applications and technologies using a visual, drag-and-drop interface.

Tricentis: Tricentis is an enterprise test platform that has low-code test creation capabilities and prebuilt templates for fast test case creation.

Monitoring:

New Relic, AppDynamics: Application performance monitoring (APM) tools that can be used to monitor the performance of Salesforce applications.

Splunk, Sumo Logic, ELK: Log aggregation and analysis tools that can be used to collect and analyze Salesforce logs.

Data backup and Sandbox seeding:

OwnBackup: A popular backup and recovery solution specifically for Salesforce. It offers features like automated backups, granular recovery, sandbox seeding, and comparison tools.

Gearset: A DevOps platform for Salesforce that also includes robust backup and recovery capabilities. It offers flexible backup schedules, point-in-time recovery, and data comparison features.

Spanning Backup: Another popular Salesforce backup solution that provides automated backups, granular recovery, and security features like encryption and compliance controls.

AvePoint: A comprehensive backup and recovery platform supporting Salesforce and other SaaS applications. It offers features like automated backups, granular recovery, and eDiscovery.

CloudAlly: A cloud-based backup and recovery solution that offers automated backups, granular recovery, sandbox seeding, and comparison tools to protect and manage your Salesforce data.

Skyvia: A cloud-based data integration platform that simplifies data loading, synchronization, backup, and management between Salesforce and various data sources.

Odaseva: A data protection platform that provides enterprise-grade backup, archiving, and compliance solutions to ensure the security and integrity of your Salesforce data.

Data anonymization:

Odaseva: Odaseva provides a comprehensive platform for data governance, backup, and archiving. It includes data anonymization features that help organizations comply with data privacy regulations by masking or anonymizing sensitive data.

OwnBackup: OwnBackup is a popular backup and recovery solution for Salesforce. It also offers data anonymization capabilities to protect sensitive information in sandbox environments, ensuring that data privacy is maintained during development and testing.

Salesforce Shield: Salesforce Shield includes features like Platform Encryption, Event Monitoring, and Field Audit Trail. While it focuses more on security and compliance, it can be used along with other tools to anonymize data by encrypting sensitive information.

Gearset: Gearset is known for its deployment and CI/CD capabilities but also offers data management tools, including data masking features that allow for the anonymization of sensitive data during sandbox seeding.

Salesforce DataMask: DataMask is a Salesforce-native tool designed specifically for data anonymization and obfuscation. It helps protect sensitive data in Salesforce sandbox environments by masking personally identifiable information (PII) and other sensitive data fields.

Cloud Compliance by IBM: IBM’s Cloud Compliance solution offers data masking and anonymization services that can be integrated with Salesforce to protect sensitive information and ensure compliance with data privacy regulations.

Delphix: Delphix provides a data platform that includes data masking capabilities. It helps secure sensitive information by replacing it with realistic, yet fictitious data, making it a suitable choice for anonymizing data in Salesforce environments.

Vormetric Data Security Platform by Thales: Vormetric offers data masking and tokenization solutions that can be used to anonymize sensitive data within Salesforce. It helps meet compliance requirements and protect data privacy.

DataGroomr: DataGroomr offers a range of data management tools for Salesforce, including data deduplication and data anonymization. It ensures that sensitive information is protected while maintaining data integrity.

MuleSoft Anypoint Platform: MuleSoft’s Anypoint Platform, which is part of Salesforce, includes capabilities for data integration and transformation. It can be used to anonymize data as it moves between systems, ensuring that sensitive information is masked during data transfers.

Collaboration and Documentation Tools:

Confluence: Atlassian’s collaboration tool for documentation and team collaboration.

JIRA: An issue and project tracking tool that integrates with Salesforce for managing development tasks and sprints.

Security Tools:

Salesforce Shield: Security services including event monitoring, field audit trail, and platform encryption.

Aqua Security: Provides security for containerized environments, which can be relevant for Salesforce applications using containerized CI/CD processes.

Snyk: A developer-first security platform that seamlessly integrates with your development workflow to identify and fix vulnerabilities in open-source dependencies and custom code. Snyk's Salesforce scanning capabilities extend to Apex, Visualforce, and Lightning components.

Checkmarx: A static application security testing (SAST) tool that scans your Salesforce codebase for vulnerabilities, including those related to injection attacks, cross-site scripting (XSS), and insecure configuration. Checkmarx provides detailed reports and remediation guidance to help you address security risks.

Chimera: A Salesforce-specific security scanner that focuses on identifying vulnerabilities in Apex code, configurations, and user permissions. Chimera provides actionable insights and recommendations to strengthen your Salesforce security posture.

Fortify: A comprehensive application security solution that offers both static and dynamic analysis (SAST/DAST) capabilities. Fortify can scan your Salesforce applications for a wide range of vulnerabilities, including those related to authentication, authorization, and data validation.

DigitSec: A runtime application self-protection (RASP) solution that monitors your Salesforce applications in real time to detect and block attacks. DigitSec can also identify and alert you to potential vulnerabilities before they are exploited.

Code Quality and Static Analysis Tools:

SonarQube: A widely used open-source platform for continuous code quality inspection. It supports Apex and Visualforce, providing insights on code complexity, duplication, potential bugs, and security vulnerabilities. It integrates well with popular CI/CD pipelines.

CodeScan: A comprehensive static code analysis tool designed specifically for Salesforce. It offers a wide range of rules and checks for security, performance, best practices, and compliance. It also provides detailed reports and integrates with IDEs and version control systems.

Clayton: A Salesforce-native static analysis tool that focuses on identifying security vulnerabilities and compliance issues. It analyzes Apex, Visualforce, Lightning components, and other metadata types to detect potential risks.

ApexPMD: An open-source static analysis tool based on the popular Java tool PMD. It analyzes Apex code for common coding errors, unused variables, inefficient algorithms, and other potential issues.

CodeClimate: A cloud-based platform for automated code review. It integrates with various version control systems and supports multiple programming languages, including Apex. It provides feedback on code quality, style, and potential issues.

Codacy: Another cloud-based code analysis platform that supports Apex and other languages. It provides insights on code quality, security, and complexity. It also offers code review automation and integration with popular development tools.

The True North Star:

With these DevOps tools implemented, most organizations aim to deliver high-quality software faster and more reliably, with the goal of achieving a state of continuous improvement and delivery, where releases become smaller, faster, and more predictable, and the time and effort required to deliver new functionality to end-users is significantly reduced.

Back to Reality:

Having done close to 20+ DevOps implementations, achieving the north star is possible. But the goal of delivering software immediately after development is a fallacy. I’ve worked with high-tech companies where they have implemented this process of developing a User Story and deploying to lower sandboxes for all the necessary testing and approvals; and deploying into prod immediately after. This process works in practice faces several challenges as it:

Assumes that all these tools work synchronously all the time without a glitch

Assumes the story is as intended, meaning all the requirements have been properly communicated to the developer

Assumes that testing is comprehensive and covers all scenarios

Assumes that all stakeholders are aligned and aware of the changes

Assumes that rollback plans are in place and easily executable

Assumes that monitoring and logging are actively monitored to detect issues quickly

These assumptions are often not met in real-world scenarios, leading to deployment failures, time consuming rollbacks, and even worse: potential downtime. It is only realistic to assume smaller, faster, and more predictable up till UAT right before production. A more pragmatic approach to DevOps considers these potential pitfalls and incorporates a good time buffer for successful Production deployments. Even with all these tools implemented, the most successful release cycle is every week where the previous Monday to Friday stories would be deployed on the following Wednesday. What this means for the enterprise is extremely high quality / high conviction changes being deployed to production with minimal rollback. In fact, one of my clients who implemented a comprehensive DevOps strategy hasn't experienced a rollback in over three and a half years, with no signs of interruption to this remarkable trend.

III. Benefits of Salesforce DevOps

Improved Collaboration and Communication Between Teams:

Salesforce DevOps fosters a culture of collaboration by breaking down silos between development, operations, and business teams. It leverages tools and practices that promote transparency, facilitate real-time communication, and ensure that all stakeholders are aligned on project goals and timelines. This enhanced collaboration leads to more cohesive and efficient teams, ultimately driving better project outcomes.

Faster Time-to-Market and Increased Efficiency:

Adopting DevOps practices in Salesforce development accelerates the software delivery lifecycle. By automating repetitive tasks such as testing, integration, and deployment, teams can reduce the time required to deliver new features and updates. This accelerated delivery cycle allows organizations to respond rapidly to market changes, gain a competitive edge, and deliver new features and enhancements more frequently.

Enhanced Quality and Reduced Errors:

DevOps emphasizes continuous integration and continuous delivery (CI/CD), which involves regular automated testing and code reviews. These practices help in identifying and resolving issues early in the development process, leading to higher-quality releases with fewer errors. Automated testing ensures that each change is thoroughly vetted before it reaches production, thereby reducing the likelihood of defects.

Increased Customer Satisfaction and Loyalty:

By delivering high-quality software more quickly and reliably, Salesforce DevOps enables organizations to meet and exceed customer expectations. Frequent and reliable releases mean that customers receive new features, improvements, and bug fixes regularly. This responsiveness to customer needs enhances satisfaction and builds loyalty, as clients can trust that their feedback will be promptly addressed.

Enhanced Security:

DevOps integrates security into the development process (DevSecOps), ensuring that security is addressed from the start. On top of that, real-time monitoring and alerting help identify and mitigate security threats more quickly.

Reduced Downtime and Faster Recovery:

In case of deployment failures, automated rollback mechanisms can restore previous versions quickly, minimizing downtime. Continuous monitoring and proactive maintenance reduce the risk of unexpected outages and improve system resilience.

Better Compliance and Auditability:

Automated processes provide detailed logs and audit trails, making it easier to follow regulatory requirements. Consistent, repeatable processes ensure compliance standards are met consistently.

IV. Challenges in Implementing Salesforce DevOps

Common Obstacles and Pain Points

Implementing Salesforce DevOps is not without its challenges. Organizations often encounter various obstacles, including:

Cultural Resistance: Shifting to a DevOps culture requires changes in mindset and working practices, which can be met with resistance from team members accustomed to traditional methods. Breaking down existing silos and fostering collaboration between traditionally separate teams can be challenging.

Tool Integration: Integrating various DevOps tools with existing Salesforce environments can be complex and may require significant customization. Ensuring that all tools work well together and with Salesforce's unique environment requires careful planning and testing.

Skill Gaps: DevOps requires a blend of skills in development, operations, and automation. Finding or training personnel with the right skill set can be challenging.

Security and Compliance: Ensuring that DevOps practices comply with organizational security policies and regulatory requirements adds another layer of complexity. Integrating security into the DevOps pipeline (DevSecOps) requires additional tools and practices to identify and mitigate vulnerabilities.

Overcoming Resistance to Change and Cultural Shifts

Addressing resistance to change is crucial for the successful implementation of Salesforce DevOps. Strategies to overcome this resistance include:

Leadership Support: Strong endorsement from leadership can help drive the cultural shift required for DevOps. Leaders should communicate the benefits clearly and provide the necessary resources and support.

Training and Education: Providing comprehensive training programs can help bridge skill gaps and ease the transition. Continuous learning opportunities ensure that team members stay updated with the latest DevOps practices and tools.

Incremental Implementation: Gradually introducing DevOps practices rather than a wholesale change can help teams adapt more comfortably. Start with small, manageable projects to demonstrate success and build confidence.

Collaboration Tools: Utilizing collaboration tools that facilitate communication and transparency can help break down silos and promote a more cohesive working environment.

By addressing these challenges thoughtfully and strategically, organizations can successfully implement Salesforce DevOps, reaping its numerous benefits while minimizing the associated risks.

V. Best Practices for Salesforce DevOps Implementation

Establishing a Center of Excellence (CoE):

Establishing a Center of Excellence (CoE) brings forth centralized governance, guaranteeing that best practices and standards are consistently applied across all DevOps activities. This ensures uniformity and quality in DevOps processes, leading to increased efficiency and effectiveness. By establishing clear guidelines and protocols, the CoE helps maintain a structured and disciplined approach to DevOps, which is essential for achieving optimal performance and reliability in software development and deployment.

A CoE facilitates knowledge sharing and training, empowering teams to stay updated with the latest DevOps trends and tools. Through regular workshops, seminars, and documentation, team members gain insights into emerging technologies and methodologies, enabling them to adapt quickly to changing requirements. This fosters a culture of continuous learning and innovation within the organization.

A CoE drives continuous improvement by regularly reviewing processes, tools, and metrics to identify areas for enhancement. By analyzing performance data, conducting retrospectives, and gathering feedback from stakeholders, the CoE identifies bottlenecks, inefficiencies, and finds opportunities for optimization. This iterative approach ensures that DevOps practices are constantly refined, resulting in improved delivery outcomes and customer satisfaction.

Sandbox Strategy & Scratch Orgs:

Need for Sandboxes:

In the context of a robust Salesforce DevOps implementation, utilizing multiple sandboxes is crucial for maintaining environment consistency. By creating distinct development, testing, and staging environments, organizations can ensure that each phase of the development lifecycle operates within a controlled and predictable environment. This approach minimizes the risk of unforeseen issues arising from environment discrepancies, ultimately leading to more reliable deployments.

Isolated testing environments play a key role in this strategy by preventing changes in one environment from inadvertently impacting others. By isolating testing environments, teams can conduct comprehensive and focused testing specific to their project requirements, free from the interference of unrelated changes. This isolation is essential for identifying and addressing issues early in the development process, thereby improving overall code quality.

Effective data management practices are also integral to this strategy. Maintaining data consistency and integrity across various environments is critical to ensuring that test results are reliable and reflective of real-world scenarios. Implementing robust data management protocols helps in synchronizing data across development, testing, and staging environments, reducing the risk of data-related issues during deployment. By prioritizing these practices, organizations can enhance their Salesforce DevOps processes, leading to smoother and more successful releases.

Role of Scratch Orgs:

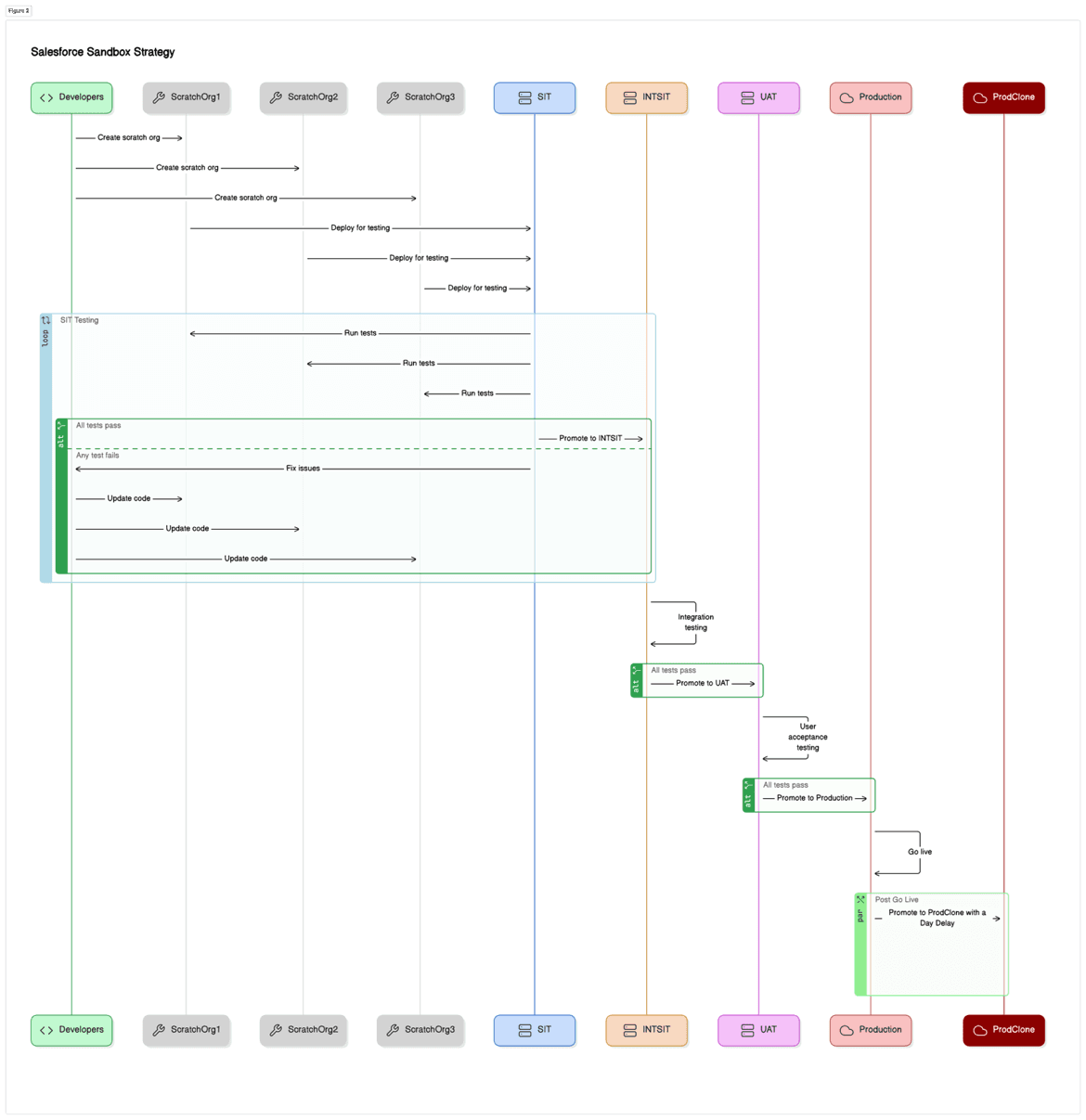

Though the sandbox strategy changes drastically by team size, the idea of one Sandbox per developer isn’t very practical as the amount of work to maintain all these sandboxes quickly falls apart for large teams. A better approach would be to spin up scratch orgs for feature driven development and sandboxes for more persistent needs such as an integration or test environment. Here is how I would structure the sandboxes:

The Salesforce development process depicted in this flow outlines a structured approach to ensuring high-quality software releases through a series of carefully managed stages, that I regularly recommend to most of my clients. Initially, developers create individual scratch orgs for their development work, where they can write and update code independently. This isolation allows for focused unit testing within each scratch org to verify functionality. Once development is complete, the code is deployed to the System Integration Testing (SIT) environment, where integration tests are conducted to ensure that all components work harmoniously. If any issues arise during this phase, developers return to their scratch orgs to make necessary fixes and redeploy the code until all tests in SIT pass successfully.

Following successful integration testing, the code is promoted to the Integration/SIT (INT/SIT) environment for comprehensive integration testing. Upon passing these tests, the code moves to the User Acceptance Testing (UAT) environment, where users perform acceptance testing to validate that the system meets their requirements. After PO approval in UAT, the code is deployed to the production environment for end users. A post-go-live phase includes promoting the changes to a ProdClone environment with a day delay to maintain a synchronized backup and potential disaster recovery. This process ensures that any potential issues are addressed at each stage, leading to reliable and efficient software delivery in the Salesforce ecosystem.

Need for Separate non-pipeline Sandboxes:

Separate non-pipeline sandboxes are essential for specific tasks that require dedicated, stable environments outside the regular CI/CD pipeline. These sandboxes serve various purposes, including testing the latest Salesforce releases and AppExchange packages. By isolating these activities, organizations can ensure that the core development and deployment processes remain unaffected by experimental or disruptive changes. For instance, when a new Salesforce release is announced, having a separate sandbox allows teams to thoroughly evaluate and understand the new features, assess any potential impact on existing systems, and prepare for a smooth transition when the update is eventually rolled out to production environments. Similarly, testing AppExchange packages in a non-pipeline sandbox ensures that these integrations do not interfere with ongoing development work, allowing for a thorough evaluation of their functionality and compatibility. By maintaining separate non-pipeline sandboxes, organizations can ensure that they have the flexibility to support specialized activities while maintaining the integrity and efficiency of their primary development and deployment processes.

Smart Pipeline:

Automated Workflows:

Design smart CI/CD pipelines to automate workflows, including code integration, testing, and deployment. This not only saves time but also ensures consistency and quality in the software development process.

Pipeline Optimization:

Continuously optimize pipelines to reduce bottlenecks and improve efficiency. An example could be only accounting for changes in the branch so that developers don’t have to keep updating the branches when prior changes are merged. This not only reduces the time spent on pipeline runs but also ensures that the pipelines are always up-to-date with the latest code changes.

Parallel Processes:

Use parallel processing to run multiple tests and deployments simultaneously, speeding up the release cycle. This is especially useful for large-scale projects with complex dependencies. Parallel processing can significantly reduce the time it takes to test and deploy new features, allowing teams to release software more frequently.

Selecting the Right Tools and Technologies:

When selecting the right tools and technologies, tool compatibility is a crucial consideration. It is important to choose tools that are compatible with Salesforce and can integrate well with existing systems. This ensures seamless communication and data exchange between different components, reducing the risk of data inconsistencies or disruptions in workflow. By selecting compatible tools, organizations can create a cohesive and efficient technology ecosystem that supports their business processes effectively.

Scalability is another key factor to consider when choosing tools and technologies. Organizations should select tools that can scale with their growth and evolving needs. As a business grows, its data volume, user base, and operational requirements may increase. Tools that are scalable can accommodate these changes without compromising performance or reliability. By investing in scalable tools, organizations can ensure their technology infrastructure can adapt to future demands, avoiding the need for costly and time-consuming migrations or upgrades in the long run.

User-friendliness is also a critical aspect to consider when selecting tools and technologies. Organizations should ensure that the tools they choose are user-friendly and easy to use. User-friendly tools encourage adoption and increase productivity, as users can quickly learn and master the tools' functionality. This reduces the need for extensive training and minimizes the risk of errors or misuse. Additionally, tools that are easy to use facilitate better collaboration and communication among team members, further improving the efficiency and effectiveness of DevOps processes.

Automating Testing and Deployment:

Comprehensive Testing: Implementing automated testing at all stages is crucial for ensuring the reliability and quality of software. This includes unit tests, which verify the functionality of individual components; integration tests, which ensure that different modules or services work together correctly; and functional tests, which validate the software from an end-user perspective. By automating these tests, organizations can quickly identify and address issues, reducing the likelihood of defects reaching production. Comprehensive testing provides a solid foundation for delivering high-quality software, as it ensures that all aspects of the application are thoroughly examined and validated before deployment.

Continuous Deployment: Using continuous deployment to automate the release process is essential for achieving quick and reliable deliveries. Continuous deployment automates the entire software release pipeline, from code commit to production deployment, allowing new features and updates to be delivered rapidly and consistently. This automation minimizes manual intervention, reducing the risk of human error and accelerating the release cycle. By ensuring that code changes are automatically tested and deployed as soon as they are ready, continuous deployment helps organizations respond swiftly to market demands and user feedback, maintaining a competitive edge.

Rollback Mechanisms: Implementing automated rollback mechanisms is vital for maintaining stability and reliability in the deployment process. In the event of deployment failures, automated rollback mechanisms allow the system to quickly revert to a previous stable version, minimizing downtime and mitigating the impact on users. This ensures that any issues introduced by new deployments can be swiftly resolved without significant disruption. By incorporating rollback mechanisms into the deployment pipeline, organizations can enhance their resilience and ensure that they can recover quickly from unforeseen problems, maintaining a high level of service availability and reliability.

Monitoring and Feedback Loops:

Real-time monitoring is a critical aspect of ensuring the optimal performance, security, and health of any application or system. By setting up real-time monitoring, organizations can proactively track and monitor key metrics and indicators to identify potential issues or anomalies in real-time. This enables teams to address problems promptly, minimize downtime, and maintain a seamless user experience.

Beyond performance and security, real-time monitoring also extends to system health, encompassing aspects like page performance, load times and usage statistics. By tracking these metrics, organizations can preemptively address issues that might affect the stability and availability of their applications. This proactive approach not only enhances the user experience by minimizing downtime and disruptions but also optimizes resource utilization, ensuring that the infrastructure supporting the applications is used efficiently and effectively.

Proactive alerting takes real-time monitoring one step further by actively notifying teams of potential issues before they impact users. By leveraging proactive alerting mechanisms, organizations can minimize the impact of problems on end-users, reduce the time to resolution, and prioritize resources effectively. Proactive alerts can be configured to trigger based on specific thresholds, performance metrics, or error logs, ensuring that teams are notified promptly and can initiate immediate action to mitigate any potential issues.

Finally, Integrating feedback loops to continuously gather and act on user feedback is a critical component of iterative product improvement. By systematically collecting feedback from users, organizations can gain valuable insights into how their applications are being used and where there are opportunities for enhancement. This feedback can be analyzed to identify common pain points, feature requests, and usability issues, providing a clear roadmap for future development. Acting on this feedback in a timely manner ensures that the product evolves in line with user needs and expectations, driving higher user satisfaction and loyalty. Continuous feedback integration promotes a cycle of constant improvement, enabling the organization to deliver a product that is both user-centric and high-performing.

Measure and Optimize:

Establishing clear metrics and key performance indicators (KPIs) is essential for measuring the success of DevOps initiatives. These metrics might include deployment frequency, which tracks how often new code is released; lead time for changes, which measures the time it takes from code commit to deployment; and mean time to recovery, which assesses how quickly the system recovers from failures. By defining these and other relevant metrics, organizations can objectively evaluate their DevOps performance, identify trends, and set benchmarks for improvement. Clear metrics provide a framework for understanding how well DevOps practices are working and where adjustments might be needed to achieve desired outcomes.

Using data-driven insights to make informed decisions is a cornerstone of effective DevOps management. By collecting and analyzing data from various stages of the development and deployment processes, organizations can gain valuable insights into performance, efficiency, and areas of risk. This data-driven approach enables teams to identify bottlenecks, predict potential issues, and make proactive adjustments to optimize workflows. Decisions grounded in data are more likely to lead to improvements in efficiency and effectiveness, as they are based on empirical evidence rather than intuition or guesswork.

Regularly reviewing performance metrics and feedback is crucial for fostering a culture of continuous improvement in DevOps practices. By consistently evaluating the effectiveness of current processes and tools, organizations can identify areas for enhancement and implement changes to drive better outcomes. This iterative process involves analyzing metrics, gathering feedback from stakeholders, and testing new approaches to find the most effective solutions. Continuous improvement ensures that DevOps practices remain dynamic and responsive to changing needs and challenges, ultimately leading to more efficient, reliable, and high-quality software delivery.

How A.I. will impact Salesforce DevOps?

Whilst every company is rebranding themselves as an A.I. company, none of the products I have tested so far are significantly better than a year ago when ‘A.I.’ (Large Language Models (LLMs)) weren't prevalent. A few of the products used Machine Learning and other A.I. techniques prior to the GPTs of the world, hence I believe that this will be a slow and iterative process to see any kind of product improvements. But by saying that, speaking with several product teams, A.I. has become a priority and every company is trying to incorporate it into their systems. In the three-to-five-year time horizon, I believe that every category in the Tools and Technologies section will use A.I which will make those individual categories better. There are some examples that product teams have been working on and how I think A.I. can improve DevOps:

Enhanced Automation and Efficiency:

Automated Code Reviews: AI can analyze code for potential bugs, vulnerabilities, and best practice adherence, providing automated feedback to developers. Tools like Codacy and SonarQube are integrating AI to improve code quality checks.

Intelligent Testing: AI-driven testing tools can automatically generate test cases, execute them, and analyze the results, significantly reducing the time and effort required for testing. This leads to faster and more reliable testing cycles.

Improved Decision Making:

Predictive Analytics: AI can analyze historical data to predict potential issues in the development and deployment process. For example, it can forecast the likelihood of deployment failures based on past trends, helping teams take proactive measures.

Resource Optimization: AI algorithms can optimize resource allocation, predicting the required computational power for various tasks, for example, A.I. could alert developers that a potential for Apex governor limits will be hit during the code execution.

Enhanced Security:

Threat Detection: AI can enhance security by identifying unusual patterns and potential threats in real-time. This includes detecting anomalies potential ways that LWC’s or Apex code be susceptible for SOQL attacks.

Automated Compliance: AI can help ensure compliance with regulatory requirements by continuously monitoring and auditing processes, identifying non-compliance issues, and suggesting corrective actions.

Predictive Maintenance and Monitoring:

Proactive Monitoring: AI can continuously monitor applications and infrastructure, predicting potential issues before they occur and recommending preventive actions. This reduces downtime and improves system reliability.

Anomaly Detection: AI algorithms can detect anomalies in application performance and user behavior, triggering alerts and initiating automated responses to mitigate issues quickly.

Intelligent CI/CD Pipelines:

Dynamic Pipelines: AI can optimize CI/CD pipelines by dynamically adjusting the workflow based on real-time data and historical trends. For example, it can prioritize critical deployments, optimize build times, and manage dependencies more effectively.

Self-Healing Pipelines: AI can enable self-healing capabilities in CI/CD pipelines, where the system can automatically detect and fix issues without human intervention, ensuring smooth and continuous delivery.

Multi-Project Pipelines: AI can intelligently manage multiple CI/CD pipelines for different projects within the same organization. It can allocate resources, balance workloads, and coordinate deployments across various projects to ensure that each pipeline operates efficiently without conflicts, facilitating seamless integration into the shared production environment.

Continuous Learning and Improvement:

Machine Learning Models: By integrating machine learning models, DevOps teams can continuously learn from past deployments, user feedback, and performance data to improve future releases.

Adaptive Systems: AI can create adaptive systems that evolve with changing requirements and environments, ensuring that the DevOps processes remain efficient and effective over time.

Again, this is more of a distant yet achievable dream within the next few years. We are not there yet but we will be soon, yet the traditional challenges of integrating these technologies to function cohesively will persist for the foreseeable future.

Conclusion:

Salesforce DevOps offers numerous benefits, including improved collaboration, faster time-to-market, enhanced quality, increased security, data backups and better compliance. By automating and streamlining development and deployment processes, DevOps enables teams to deliver high-quality software efficiently and reliably, driving business success.

Now is the time to embrace Salesforce DevOps and transform your development processes. By adopting best practices and leveraging the right tools and technologies, you can overcome common challenges and achieve 10x improvements in efficiency, quality, and speed.